What is Data Reengineering?

In any organization, the primary purpose of data is to yield insights. Those insights drive the management decisions that move the company forward. As businesses have digitalized rapidly, the data generated by business applications has snowballed.

With these changes in the way business is done, and with data arriving in various forms and volumes, many data applications have become outdated and now hinder decision-making.

The process of changing existing data applications so that they can handle a large volume and variety of data arriving at high velocity is called Data Reengineering.

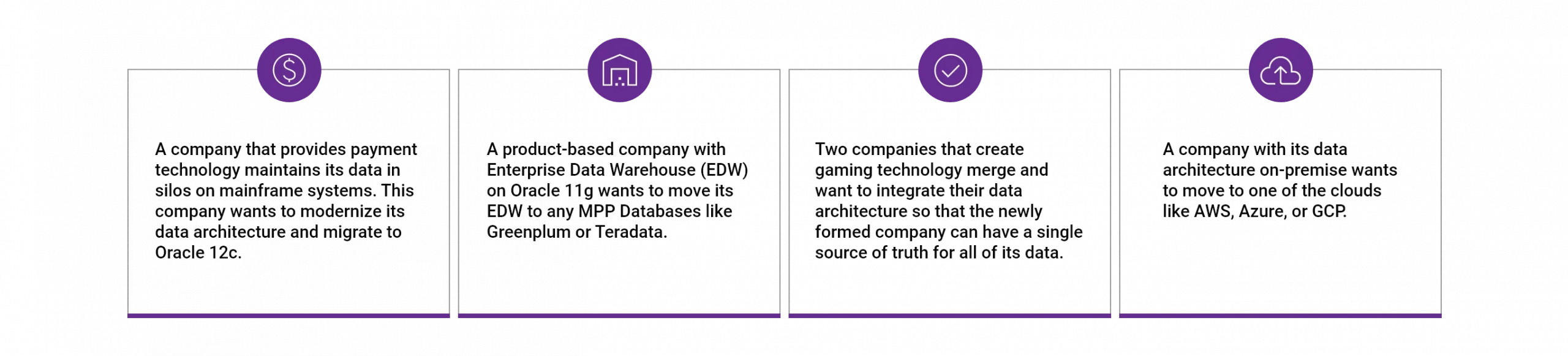

There can be several scenarios where we need to reengineer the existing application. Here are some:

Here’s how you can reengineer data:

Choose the Right Infrastructure Setup

This is an important decision that the engineering team has to make. Choosing the right infrastructure will make the newly reengineered application capable of storing and processing data more effectively than the legacy application.

AWS, Azure, and GCP provide Infrastructure-as-a-Service (IaaS), so companies can scale their configuration up or down dynamically to meet changing requirements and are billed only for the services they use.

For example, we had an Azure Data Factory pipeline that loaded about 200 million records into an Azure SQL DB configured at the standard service tier. We observed that inserts took a long time, and the pipeline ran for almost a day. The solution was to scale the Azure SQL DB up to the premium service tier for the load and scale it back down once the load completed.

So, we configured REST API calls in the pipelines to scale up to the premium tier before the load starts and scale back down to the standard service tier once the load completes.
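As a rough illustration of that scale-up, load, scale-down pattern, here is a minimal Python sketch. It only builds the request and wraps the load; it is not the pipeline we actually ran. The `api-version` value, the `P2` and `S3` service objectives, and the `send` callable (whatever performs the authenticated HTTP call in your setup) are all assumptions for illustration, so check them against the current Azure SQL REST reference before relying on them.

```python
import json
from contextlib import contextmanager

# Assumed api-version; verify against the current Azure SQL REST reference.
API_VERSION = "2021-11-01"


def build_scale_request(subscription_id, resource_group, server, database, sku_name, tier):
    """Return (method, url, body) for a request that changes the database SKU."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Sql/servers/{server}"
        f"/databases/{database}"
        f"?api-version={API_VERSION}"
    )
    body = json.dumps({"sku": {"name": sku_name, "tier": tier}})
    return "PATCH", url, body


@contextmanager
def scaled_up(send, target, high=("P2", "Premium"), low=("S3", "Standard")):
    """Scale up before the load and always scale back down afterwards.

    `send` is a hypothetical callable(method, url, body) that performs the
    authenticated HTTP call. `target` is a tuple of
    (subscription_id, resource_group, server, database).
    """
    send(*build_scale_request(*target, *high))
    try:
        yield
    finally:
        # Runs even if the load fails, so the premium tier is not left running.
        send(*build_scale_request(*target, *low))
```

You would wrap the bulk load in `with scaled_up(send, target): ...`. Keep in mind that a tier change is not instantaneous, so a real pipeline would also need to wait for the scale operation to finish before starting the load.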

Select the Right Technology

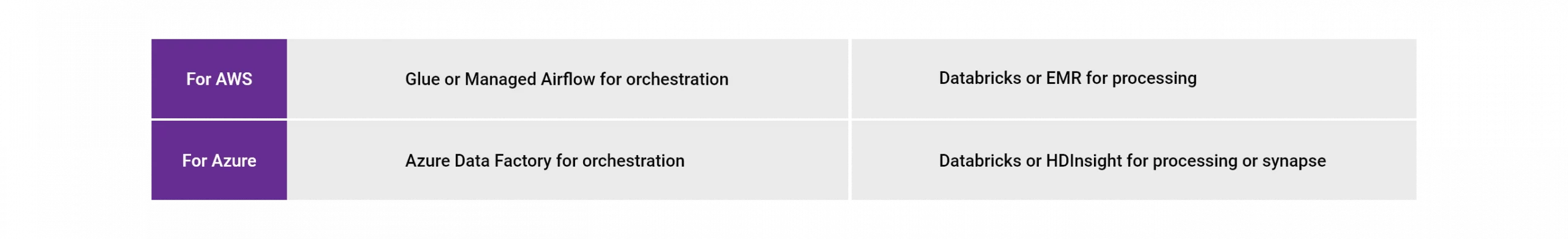

The technical software stack needs to be chosen based on the kind of reengineering your company is doing. You can choose from various technologies based on the type and volume of data your organization processes. Below are some examples:

- If the change is from mainframe to another technology, you can choose Oracle, either on-premises or in the cloud. Informatica or similar tools can handle ingestion and orchestration, and Oracle’s procedural language PL/SQL can be used for the business logic.

- If the change is from on-premises to the cloud, AWS, Azure, and GCP provide Software-as-a-Service (SaaS) offerings.

Design the Right Data Model

During this reengineering phase, you must determine how best the existing data model can accommodate the new types and volume of data flowing in.

Identify the functional and technical gaps and requirements. When you analyze and understand your data, it can result in one of two scenarios.

- You identify new columns to add to existing tables to provide additional value to the business.

- You identify new tables and relate them to the existing tables in the data model. These new tables can then feed reports that help your business leaders make more effective decisions.
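To make the two scenarios concrete, here is a small sketch using Python's built-in `sqlite3` module. The `orders` and `order_feedback` tables and their columns are hypothetical and stand in for whatever your own data model contains.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")

# Hypothetical legacy table with one existing row.
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL NOT NULL)")
conn.execute("INSERT INTO orders (order_id, amount) VALUES (1, 120.0)")

# Scenario 1: add a new column to an existing table.
# Existing rows pick up the default value.
conn.execute("ALTER TABLE orders ADD COLUMN channel TEXT DEFAULT 'unknown'")

# Scenario 2: add a new table and relate it to the existing one.
conn.execute(
    """
    CREATE TABLE order_feedback (
        feedback_id INTEGER PRIMARY KEY,
        order_id INTEGER NOT NULL REFERENCES orders(order_id),
        rating INTEGER NOT NULL
    )
    """
)
conn.execute("INSERT INTO order_feedback (order_id, rating) VALUES (1, 4)")

# A report query that joins the old and the new structures.
report = conn.execute(
    """
    SELECT o.order_id, o.channel, o.amount, f.rating
    FROM orders AS o
    LEFT JOIN order_feedback AS f ON f.order_id = o.order_id
    """
).fetchall()
```

The `LEFT JOIN` keeps orders that have no feedback yet, so existing reports built on `orders` do not lose rows when the new table is introduced.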

Design the Right ETL/ELT Process

This process involves reconstructing the legacy code to be compatible with the chosen infrastructure, technology stack, and the redesigned data model.

To populate data into the changed data model, your development team needs to incorporate appropriate extract and load strategies so that data can flow across schemas at high velocity and users can access reports with less latency.
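One common load strategy is an incremental load driven by a high-watermark: each run copies only the rows that changed since the previous run. The sketch below shows the idea with `sqlite3`. The table names (`src_sales`, `tgt_sales`, `load_watermark`) and the `updated_at` column are assumptions for illustration, and your own strategy may look quite different.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE src_sales (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT);
    CREATE TABLE tgt_sales (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT);
    CREATE TABLE load_watermark (table_name TEXT PRIMARY KEY, last_loaded TEXT);
    INSERT INTO load_watermark VALUES ('sales', '1900-01-01T00:00:00');
    """
)


def incremental_load(conn):
    """Copy only rows changed since the last load; return the number of rows copied."""
    (watermark,) = conn.execute(
        "SELECT last_loaded FROM load_watermark WHERE table_name = 'sales'"
    ).fetchone()
    # ISO-8601 timestamps stored as text compare correctly as strings.
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM src_sales "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    if not rows:
        return 0
    with conn:  # one transaction: the data and the watermark move together
        conn.executemany(
            "INSERT OR REPLACE INTO tgt_sales (id, amount, updated_at) VALUES (?, ?, ?)",
            rows,
        )
        conn.execute(
            "UPDATE load_watermark SET last_loaded = ? WHERE table_name = 'sales'",
            (rows[-1][2],),
        )
    return len(rows)
```

With the watermark initialized to a very old date, the first call behaves like a history load and later calls pick up only new or updated rows.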

Designing the ETL/ELT is not just a code-and-complete job; you must also track development progress and code versions properly. Create some information sources to track these, like the ones shown below:

- Milestone Tracker: The reengineering project needs to be split into development tasks, and these tasks can be tracked using any project management tool.

- Deployment Tracker: This can be used to track physical schema changes and code changes.

Once the development efforts are complete, plan a pilot phase to integrate all code changes and new code objects. Run end-to-end loads for both history and incremental data to confirm that your code does not break during the load process.

This phase ensures that data originating from existing and new sources is populated according to the business logic.
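One simple way to check this during a pilot is to compare basic profiles (row counts and column totals) of the legacy output and the reengineered output. The sketch below is only one possible check, and the table and column names you pass in are hypothetical.

```python
import sqlite3


def table_profile(conn, table, amount_column):
    """Return (row_count, total) for a table.

    `table` and `amount_column` are interpolated into the SQL, so they must
    come from trusted configuration, never from user input.
    """
    row_count, total = conn.execute(
        f"SELECT COUNT(*), COALESCE(SUM({amount_column}), 0) FROM {table}"
    ).fetchone()
    return row_count, round(total, 2)


def reconcile(conn, legacy_table, new_table, amount_column="amount"):
    """Compare simple profiles of the legacy and reengineered tables."""
    legacy = table_profile(conn, legacy_table, amount_column)
    new = table_profile(conn, new_table, amount_column)
    return {"legacy": legacy, "new": new, "match": legacy == new}
```

Matching counts and totals do not prove that every row is correct, but a mismatch is a cheap early signal that the business logic was not carried over faithfully.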

Once all the above steps are completed, you will get to Day Zero. Day Zero is when you take the reengineered solution live to production and do your sanity checks. If everything is working as expected, sunset the legacy solution. Now you can rest assured knowing that your data infrastructure empowers your leaders to make the right decisions on time and accelerate the growth of your business.